We are presenting our paper “On Futuring Body Perception Transformation Technologies: Roles, Goals and Values” at the 26th International Academic Mindtrek conference, held from the 3rd to the 6th of October 2023 at the Nokia Arena in Tampere, Finland. We will be part of Session 6: Fictional, Speculative and Critical Futures, on Thursday, October 5th, from 13:45 to 15:10. See the full program for details.

The paper’s abstract:

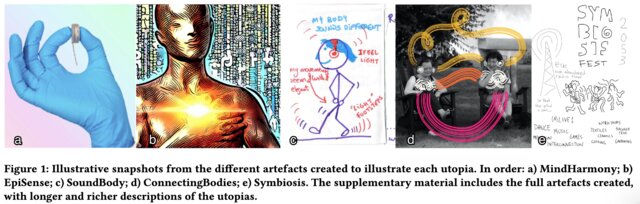

Body perception transformation technologies augment or alter our own body perception outside of our usual bodily experience. As emerging technologies, research on these technologies is limited to proofs-of-concept and lab studies. Consequently, their potential impact on the way we perceive and experience our bodies in everyday contexts is not yet well understood. Through a speculative design inquiry, our multidisciplinary team envisioned utopian and dystopian technology visions. We surfaced potential roles, goals and values that current and future body perception transformation technologies could incorporate, including non-utilitarian purposes. We contribute insights on such roles, goals and values to inspire current and future work. We also present three provocations to stimulate discussions. Finally, we contribute methodologically with insights into the value of speculative design as a fruitful approach for articulating and bridging diverse perspectives in multidisciplinary teams.